Breathing New Life into 3D Assets with Generative Repainting

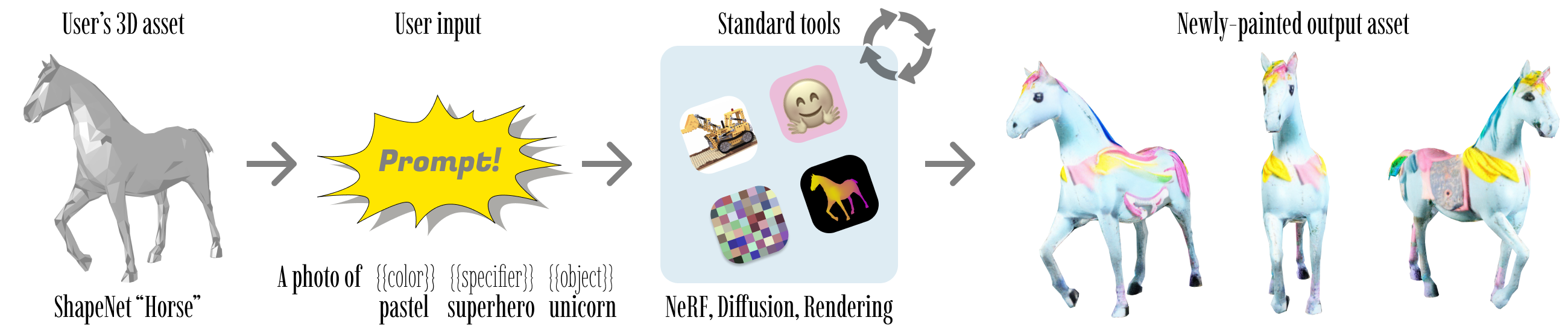

TL;DR: Our method lifts the power of generative 2D image models, such as Stable Diffusion, into 3D. Using them to synthesize unseen content for novel views, and NeRF to reconcile all generated views, our method vividly paints input 3D assets across a variety of shape categories.

Check out the interactive demo below with select objects from the ShapeNetSem dataset and compare the results of different methods!

Featured in

- 🦄 Oral talk at BMVC 2023 (the 34th British Machine Vision Conference)

- ICCV 2023 Workshop (AI for 3D Content Creation)

Interactive Viewer

Check out the interactive demo with select objects from the ShapeNetSem dataset painted by different methods! Keep in mind that each input can be iterated upon and painted in numerous ways by varying the prompt text and the algorithm seed value; we show only one painting per object.

- Click "Menu" to access or collapse model and algorithm selection controls.

- Use the mouse or touch gestures to control the model, and the slider to focus on one of the views.

Interactive Viewer Usage

Check out ways of interacting with the model viewer below.

Hugging Face Spaces Demo

Paper

Abstract:

Diffusion-based text-to-image models ignited immense attention from the vision community, artists, and content creators. Broad adoption of these models is due to significant improvement in the quality of generations and efficient conditioning on various modalities, not just text. However, lifting the rich generative priors of these 2D models into 3D is challenging. Recent works have proposed various pipelines powered by the entanglement of diffusion models and neural fields. We explore the power of pretrained 2D diffusion models and standard 3D neural radiance fields as independent, standalone tools and demonstrate their ability to work together in a non-learned fashion. Such modularity has the intrinsic advantage of easing partial upgrades, which has become an important property in such a fast-paced domain. Our pipeline accepts any legacy renderable geometry, such as textured or untextured meshes, orchestrates the interaction between 2D generative refinement and 3D consistency enforcement tools, and outputs a painted input geometry in several formats. We conduct a large-scale study on a wide range of objects and categories from the ShapeNetSem dataset and demonstrate the advantages of our approach, both qualitatively and quantitatively.
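The abstract describes 2D generative refinement and 3D consistency enforcement as independent tools connected by a non-learned orchestrator. The sketch below illustrates only that modular idea and is not the authors' implementation. Everything in it is hypothetical: the `Painter2D` and `Fuser3D` interfaces, the `repaint` loop, and the toy "asset" (a ring of texels seen through fixed-width cameras). It assumes an incremental schedule (render, complete the unseen part in 2D, refit in 3D after each view). A real pipeline would put a diffusion inpainting model where `ToyPainter` is and a NeRF where `RingTexture` is.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Protocol

Pixels = List[Optional[float]]  # None marks texels that no view has painted yet


class Painter2D(Protocol):
    """Hypothetical interface: any 2D generator that completes a partially painted view."""
    def refine(self, view: Pixels, prompt: str) -> List[float]: ...


class Fuser3D(Protocol):
    """Hypothetical interface: any 3D model that reconciles many 2D views into one asset."""
    def fit(self, views: Dict[int, List[float]]) -> None: ...
    def render(self, camera: int) -> Pixels: ...


@dataclass
class ToyPainter:
    color: float  # stands in for whatever a diffusion model would synthesize

    def refine(self, view: Pixels, prompt: str) -> List[float]:
        # Keep pixels that are already consistent in 3D; fill only unseen ones.
        return [self.color if p is None else p for p in view]


@dataclass
class RingTexture:
    """Toy asset: n texels on a ring; camera c sees texels c .. c+fov-1 (mod n)."""
    n: int
    fov: int
    texels: Pixels = field(init=False)

    def __post_init__(self) -> None:
        self.texels = [None] * self.n

    def visible(self, camera: int) -> List[int]:
        return [(camera + i) % self.n for i in range(self.fov)]

    def render(self, camera: int) -> Pixels:
        return [self.texels[t] for t in self.visible(camera)]

    def fit(self, views: Dict[int, List[float]]) -> None:
        # Average every observation of each texel so overlapping views agree.
        sums = [0.0] * self.n
        counts = [0] * self.n
        for camera, pixels in views.items():
            for t, value in zip(self.visible(camera), pixels):
                sums[t] += value
                counts[t] += 1
        self.texels = [s / c if c else None for s, c in zip(sums, counts)]


def repaint(painter: Painter2D, fuser: Fuser3D, cameras: List[int], prompt: str) -> None:
    """Hypothetical orchestration loop: 2D completion, then 3D reconciliation, per view."""
    views: Dict[int, List[float]] = {}
    for camera in cameras:
        partial = fuser.render(camera)                   # current 3D-consistent state
        views[camera] = painter.refine(partial, prompt)  # 2D step fills unseen content
        fuser.fit(views)                                 # 3D step reconciles all views


asset = RingTexture(n=8, fov=4)
repaint(ToyPainter(color=0.5), asset, cameras=[0, 2, 4, 6], prompt="a wooden chair")
print(asset.texels)
```

Because `repaint` depends only on the two interfaces, swapping in a newer 2D generator or a different 3D representation means replacing one object. This is the kind of partial upgrade the abstract refers to.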

Read more in the latest version of the paper:

Video

Large-scale comparison of ShapeNetSem texturing: the original textures, Latent-Paint, TEXTure, and our method. We present spin-views of ∼12K models from over 270 categories. The models are grouped by category and sorted by group size. Categories, IDs, and model names (used as prompts) are specified under the corresponding video tiles. Tip: use the timecodes to skip to categories of interest.
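The ordering described in the caption (grouped by category, sorted by group size) can be reproduced in a few lines of Python. This is a hypothetical sketch, not the script used to produce the video. `group_by_category` is an illustrative name, and the input is assumed to be `(category, model_id)` pairs. Descending order and alphabetical tie-breaking are also assumptions, since the caption only says that groups are sorted by size.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def group_by_category(models: List[Tuple[str, str]]) -> List[Tuple[str, List[str]]]:
    """Group (category, model_id) pairs by category, largest groups first (assumed order)."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for category, model_id in models:
        groups[category].append(model_id)
    # Sort by group size, descending; ties are broken by category name for a stable layout.
    return sorted(groups.items(), key=lambda kv: (-len(kv[1]), kv[0]))


print(group_by_category([("Chair", "a"), ("Lamp", "b"), ("Chair", "c")]))
```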

Source code

- The official repository of this project.
- NeRF-to-mesh export functionality, powering the demo above.
- Generative Metrics evaluation toolkit.
- The official Colab notebook of this project: start playing with the method now!
- Use our prepared Docker image to run the code without hassle.


Citation

Please support our research by citing our paper:

@inproceedings{wang2023breathing,
  title={Breathing New Life into 3D Assets with Generative Repainting},
  author={Wang, Tianfu and Kanakis, Menelaos and Schindler, Konrad and Van Gool, Luc and Obukhov, Anton},
  booktitle={Proceedings of the British Machine Vision Conference (BMVC)},
  year={2023},
  publisher={BMVA Press}
}